From Problem Statement to RQ by Abraham S. Fischler

What are the most common weaknesses in formulating a research question?

Objectives must always be set after a good research question has been formulated. After all, they explain how that question is going to be answered. Objectives usually begin with infinitive verbs. (Refer to Bloom’s Taxonomy/Revised Bloom’s Taxonomy.)

It can be difficult to develop realistic research objectives. There are common pitfalls such as the scope being too broad, not including enough detail, being too simplistic, being too ambitious, etc. Use these S.M.A.R.T. guidelines to try and develop your objectives:

Example

Title: An investigation into the student use of e-books at Bolton University.

Aims: Many academic libraries have expanded their library provision by the acquisition of e-books. Despite this strategic direction, the literature reveals that relatively little is known about student perceptions and attitudes towards e-books. Consequently, this research aims to narrow this research gap and conduct empirical research into student perceptions towards e-books and their frequency of use. The results will be used to provide recommendations to library management to improve the quality of service provision regarding e-books.

Research Objectives: The above aim will be accomplished by fulfilling the following research objectives:

References

A succinct discussion of the revisions to Bloom’s classic cognitive taxonomy by Anderson and Krathwohl and how to use them effectively

Source taken from: http://thesecondprinciple.com/teaching-essentials/beyond-bloom-cognitive-taxonomy-revised/

Who are Anderson and Krathwohl? These gentlemen are the primary authors of the revisions to what had become known as Bloom’s Taxonomy — an ordering of cognitive skills. (A taxonomy is really just a word for a form of classification.) This taxonomy had permeated teaching and instructional planning for almost 50 years before it was revised in 2001. And although these crucial revisions were published in 2001, surprisingly there are still educators who have never heard of Anderson and Krathwohl or their important work in relation to Bloom’s Cognitive Taxonomy. Both of these primary authors were in a perfect position to orchestrate looking at the classic taxonomy critically. They called together a group of educational psychologists and educators to help them with the revisions. Lorin Anderson was once a student of the famed Benjamin Bloom, and David Krathwohl was one of Bloom’s partners as he devised his classic cognitive taxonomy.

Here in the United States, from the late 1950s into the early 1970s, there were attempts to dissect and classify the varied domains of human learning – cognitive (knowing, or head), affective (emotions, feelings, or heart) and psychomotor (doing, or kinesthetic, tactile, haptic or hand/body). The resulting efforts yielded a series of taxonomies for each area. The aforementioned taxonomies deal with the varied aspects of human learning and were arranged hierarchically, proceeding from the simplest functions to those that are more complex. Bloom’s Cognitive Taxonomy had been a staple in teacher training and professional preparation for almost 40 years before Anderson and Krathwohl instituted an updated version. An overview of those changes appears below.

While all of the taxonomies above have been defined and used for many years, the beginning of the 21st century brought a new version of the cognitive taxonomy, known commonly before as Bloom’s Taxonomy. You can also search the Web for varied references on the other two taxonomies, affective and psychomotor. There are many valuable discussions on the development of all of the hierarchies, as well as examples of their usefulness and applications in teaching. However, it is important to note that in a number of these discussions, some web authors have mislabeled the affective and psychomotor domains as extensions of Bloom’s work. These authors are in grave error. The original cognitive domain was described and published in 1956. While David Krathwohl was one of the original authors on this taxonomy, the work was named after the senior or first author, Benjamin Bloom. The affective domain was not categorized until 1964, and as David Krathwohl was the lead author on this endeavor, it should bear his name, not Bloom’s. Bloom had nothing to do with the psychomotor domain, and it was not described or named until the first part of the 1970s. There are 3 versions of this taxonomy by 3 different authors: Harrow (1972), Simpson (1972), and Dave (1970). See full citations below.

The following chart includes the two primary existing taxonomies of cognition. Please note that in the table below, the one on the left, entitled Bloom’s, is based on the original work of Benjamin Bloom and others as they attempted in 1956 to define the functions of thought, coming to know, or cognition. This taxonomy is almost 60 years old. The taxonomy on the right is the more recent adaptation and is the redefined work of Bloom in 2000-01. That one is labeled Anderson and Krathwohl. The group redefining Bloom’s original concepts worked from 1995 to 2000. As indicated above, this group was assembled by Lorin Anderson and David Krathwohl and included people with expertise in the areas of cognitive psychology, curriculum and instruction, and educational testing, measurement, and assessment. The new adaptation also took into consideration many of Bloom’s own concerns and criticisms of his original taxonomy.

As you will see, the primary differences are not in the listings or rewordings from nouns to verbs, or in the renaming of some of the components, or even in the re-positioning of the last two categories. The major differences lie in the more useful and comprehensive additions of how the taxonomy intersects and acts upon different types and levels of knowledge: factual, conceptual, procedural and metacognitive. This melding can be charted to see how one is teaching at both knowledge and cognitive process levels. Please remember that the chart goes from simple to more complex and challenging types of thinking.

| Bloom’s Taxonomy 1956 | Anderson and Krathwohl’s Taxonomy 2001 |
| --- | --- |
| 1. Knowledge: Remembering or retrieving previously learned material. Examples of verbs that relate to this function are: | 1. Remembering: Recognizing or recalling knowledge from memory. Remembering is when memory is used to produce or retrieve definitions, facts, or lists, or to recite previously learned information. |
| 2. Comprehension: The ability to grasp or construct meaning from material. Examples of verbs that relate to this function are: | 2. Understanding: Constructing meaning from different types of functions be they written or graphic messages or activities like interpreting, exemplifying, classifying, summarizing, inferring, comparing, or explaining. |
| 3. Application: The ability to use learned material, or to implement material in new and concrete situations. Examples of verbs that relate to this function are: | 3. Applying: Carrying out or using a procedure through executing, or implementing. Applying relates to or refers to situations where learned material is used through products like models, presentations, interviews or simulations. |
| 4. Analysis: The ability to break down or distinguish the parts of material into its components so that its organizational structure may be better understood. Examples of verbs that relate to this function are: | 4. Analyzing: Breaking materials or concepts into parts, determining how the parts relate to one another or how they interrelate, or how the parts relate to an overall structure or purpose. Mental actions included in this function are differentiating, organizing, and attributing, as well as being able to distinguish between the components or parts. When one is analyzing, he/she can illustrate this mental function by creating spreadsheets, surveys, charts, or diagrams, or graphic representations. |
| 5. Synthesis: The ability to put parts together to form a coherent or unique new whole. Examples of verbs that relate to this function are: | 5. Evaluating: Making judgments based on criteria and standards through checking and critiquing. Critiques, recommendations, and reports are some of the products that can be created to demonstrate the processes of evaluation. In the newer taxonomy, evaluating comes before creating as it is often a necessary part of the precursory behavior before one creates something. |
| 6. Evaluation: The ability to judge, check, and even critique the value of material for a given purpose. Examples of verbs that relate to this function are: | 6. Creating: Putting elements together to form a coherent or functional whole; reorganizing elements into a new pattern or structure through generating, planning, or producing. Creating requires users to put parts together in a new way, or synthesize parts into something new and different creating a new form or product. This process is the most difficult mental function in the new taxonomy. |

Table 1.1 – Bloom vs. Anderson/Krathwohl

________________________________________________________________

(Diagram 1.1, Wilson, Leslie O. 2001)


Note: Bloom’s taxonomy revised – the author critically examines his own work – After creating the cognitive taxonomy, one of the weaknesses noted by Bloom himself was that there was a fundamental difference between his “knowledge” category and the other 5 levels of his model, as those levels dealt with intellectual abilities and skills in relation to interactions with types of knowledge. Bloom was very aware that there was an acute difference between knowledge and the mental and intellectual operations performed on, or with, that knowledge. He identified specific types of knowledge as:

Levels of Knowledge – The first three of these levels were identified in the original work, but rarely discussed or introduced when initially discussing uses for the taxonomy. Metacognition was added in the revised version.

(Summarized from: Anderson, L. W. & Krathwohl, D.R., et al (2001) A taxonomy for learning, teaching and assessing: A revision of Bloom’s taxonomy of educational objectives. New York: Longman.)

One of the things that clearly differentiates the new model from that of the 1956 original is that it lays out components nicely so they can be considered and used. Cognitive processes, as related to chosen instructional tasks, can be easily documented and tracked. This feature has the potential to make teacher assessment, teacher self-assessment, and student assessment easier or clearer as usage patterns emerge. (See PDF link below for a sample.)

As stated before, perhaps surprisingly, these levels of knowledge were indicated in Bloom’s original work – factual, conceptual, and procedural – but these were never fully understood or used by teachers because most of what educators were given in training consisted of a simple chart with the listing of levels and related accompanying verbs. The full breadth of Handbook I, and its recommendations on types of knowledge, were rarely discussed in any instructive or useful way. Another rather gross lapse in common teacher training over the past 50+ years is that teachers-in-training are rarely made aware of any of the criticisms leveled against Bloom’s original model.

Please note that in the updated version the term “metacognitive” has been added to the array of knowledge types. For readers not familiar with this term, it means thinking about one’s thinking in a purposeful way so that one knows about cognition and also knows how to regulate one’s cognition.

Factual Knowledge is knowledge that is basic to specific disciplines. This dimension refers to essential facts, terminology, details or elements students must know or be familiar with in order to understand a discipline or solve a problem in it.

Conceptual Knowledge is knowledge of classifications, principles, generalizations, theories, models, or structures pertinent to a particular disciplinary area.

Procedural Knowledge refers to information or knowledge that helps students to do something specific to a discipline, subject, or area of study. It also refers to methods of inquiry, very specific or finite skills, algorithms, techniques, and particular methodologies.

Metacognitive Knowledge is the awareness of one’s own cognition and particular cognitive processes. It is strategic or reflective knowledge about how to go about solving problems and cognitive tasks, including contextual and conditional knowledge and knowledge of self.
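The charting described above, where each objective is placed at the intersection of a knowledge type and a cognitive process, can be sketched in a few lines of Python. This is only an illustrative sketch, not something taken from Anderson and Krathwohl: the function names (`chart_objectives`, `coverage_counts`) and the sample objectives are assumptions made up for this example.

```python
from collections import defaultdict

# The four knowledge types and six cognitive processes named in the text,
# ordered from simple to more complex.
KNOWLEDGE_TYPES = ["factual", "conceptual", "procedural", "metacognitive"]
COGNITIVE_PROCESSES = ["remembering", "understanding", "applying",
                       "analyzing", "evaluating", "creating"]


def chart_objectives(objectives):
    """Place (text, knowledge type, cognitive process) triples on the grid."""
    grid = defaultdict(list)
    for text, knowledge, process in objectives:
        if knowledge not in KNOWLEDGE_TYPES or process not in COGNITIVE_PROCESSES:
            raise ValueError(f"unknown cell: {knowledge!r} / {process!r}")
        grid[(knowledge, process)].append(text)
    return grid


def coverage_counts(grid):
    """Count objectives per cognitive process so usage patterns become visible."""
    return {
        process: sum(len(grid.get((knowledge, process), []))
                     for knowledge in KNOWLEDGE_TYPES)
        for process in COGNITIVE_PROCESSES
    }


# Hypothetical objectives, invented purely for illustration.
sample_objectives = [
    ("List the e-book platforms the library offers", "factual", "remembering"),
    ("Compare student attitudes towards e-books and print", "conceptual", "analyzing"),
    ("Design a survey on frequency of e-book use", "procedural", "creating"),
]

if __name__ == "__main__":
    for process, count in coverage_counts(chart_objectives(sample_objectives)).items():
        print(f"{process:>13}: {count}")
```

A row of zeros for a given process (here, for instance, "applying" and "evaluating") is the kind of pattern such a chart is meant to surface.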

*A comprehensive example from the book is provided with publisher permission at http://www.scribd.com/doc/933640/Bloom-Revised

Anderson, L. W. and Krathwohl, D. R., et al (Eds.) (2001) A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives. Allyn & Bacon. Boston, MA (Pearson Education Group) **There is a newer (2013), abridged, less expensive version of this work.

Bloom, B.S. and Krathwohl, D. R. (1956) Taxonomy of Educational Objectives: The Classification of Educational Goals, by a committee of college and university examiners. Handbook I: Cognitive Domain. NY, NY: Longmans, Green

Krathwohl, D. R. (2002) A Revision of Bloom’s Taxonomy. (PDF) in Theory into Practice. V 41. #4. Autumn, 2002. Ohio State University. Retrieved @

The Anderson/Krathwohl text has numerous examples of how these concepts can be used for K-12 teachers. Since I have used this material in my teaching (a special topics graduate course on taxonomies and their uses entitled Beyond Bloom’s) and have also presented on this topic at several national conferences, I have artifacts and examples of how these revisions can be used effectively in college teaching. While I have a link above to an artifact, to be fully understood you might need to view the original assignment and the supportive documents. I would be happy to provide those and discuss them more fully. I am always happy to share information with other educators.

Originally published in ED 721 (2001) course handbook, and at:

http://www4.uwsp.edu/education/lwilson/curric/newtaxonomy.htm (2001, 2005), revised 2013

Assessment is the process of objectively understanding the state or condition of a thing, by observation and measurement. Assessment of teaching means taking a measure of its effectiveness. “Formative” assessment is measurement for the purpose of improving teaching. “Summative” assessment is what we normally call “evaluation.”

Evaluation is the process of observing and measuring a thing for the purpose of judging it and of determining its “value,” either by comparison to similar things, or to a standard. Evaluation of teaching means passing judgment on it as part of an administrative process.

Ideally, a fair and comprehensive plan to evaluate teaching would incorporate many data points drawn from a broad array of teaching dimensions. Such a plan would include not only student surveys, but also self-assessments, documentation of instructional planning and design, evidence of scholarly activity to improve teaching, and most importantly, evidence of student learning outcomes.

Reference: http://www.itlal.org/?q=node/93

(For synthesizing information, refer to Topic 0007: Matrix Method for Literature Review – Approaches to Identify Research Gaps and Generate RQ)

Research Title: The Design and Development of E-Portfolio for HIE’S in Social Sciences and Humanities

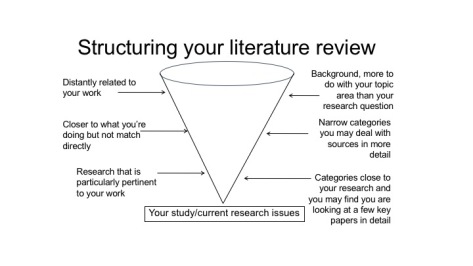

Adapted from Ridley, D. (2008). The literature review: a step-by-step guide for students. London: Sage.

Design and development research uses a wide variety of methodologies. Evaluation research techniques (both quantitative and qualitative) are also included in many studies. The following table lists the methods commonly used in design and development research:

Common Methods Employed in Design and Development Research

| Type of Research | Project Emphasis | Research Method Employed |
| --- | --- | --- |
| Product & Tool Research | Comprehensive Design & Development Projects | Case study, content analysis, evaluation, field observation, in-depth interview |
| Product & Tool Research | Phases of Design & Development | Case study, content analysis, expert review, field observation, in-depth interview, survey |
| Product & Tool Research | Tool Development & Use | Evaluation, expert review, in-depth interview, survey |
| Model Research | Model Development | Case study, Delphi, in-depth interview, literature review, survey, think-aloud methods |
| Model Research | Model Validation | Experimental, expert review, in-depth interview |
| Model Research | Model Use | Case study, content analysis, field observation, in-depth interview, survey, think-aloud methods |

The term ‘mixed methods research’ has been used to describe those studies that combine qualitative and quantitative methods. This is a way of using multiple approaches to answer given research questions.

Ross and Morrison (2004) support this trend when they take the position that qualitative and quantitative approaches are more useful when used together than when either is used alone and, when combined, are likely to yield a richer and more valid understanding.

Johnson and Onwuegbuzie (2004) describe the areas of agreement between advocates of both positions:

This fundamental agreement makes mixed methods research not only practical in many situations, but also logically sound. However, many design and development studies that employ multiple research methods are not mixing the qualitative and quantitative orientations. They are simply making use of a variety of similar strategies.

| Aspect | Description |
| --- | --- |
| State of the art | It is at the data-gathering stage that internet-mediated research (IMR) methods have the biggest impact, as opposed to other stages and processes in the methodology of a piece of research, such as conceptualisation, interpretation, etc. (e.g., Hewson, 2008). IMR approaches which seemed viable at this time included interviews, focus groups and observational studies which used linguistic data, and procedures for implementing these methods were devised and piloted (e.g., interviews: Chen & Hinton, 1999; Murray & Sixsmith, 1998; focus groups: Gaiser, 1997; Tse, 1999; Ward, 1999; linguistic observation: Bordia, 1996; Workman, 1992; Ward, 1999). |
| Tools, technologies, procedures | Synchronous approaches, which gather data in real time. Asynchronous approaches, which can also be followed in ‘real time’ (e.g., by subscribing to a group and following an ongoing discussion). |
| Design issues and strategies | |
| Depth and reflexivity | |
| Levels of rapport | ‘Getting to know’ participants may help in maximising levels of confidence in the authenticity of the accounts they offer during the course of an interview. |
| Anonymity, disclosure, social desirability | |
| Principles for good practice in qualitative internet-mediated interview and focus group research | |
| Principles for good practice in qualitative internet-mediated observation and document analysis research | |

The differences between quantitative and qualitative research are as follows:

|

Aspect |

Quantitative |

Qualitative |

|

Definition |

Concerned with discovering fact about social phenomena. | Concerned with understanding human behaviour form infromants perspective. |

|

Focus |

Assumes and fixed and measurable reality | Assumes a dynamic and negotiated reality |

|

Purpose |

|

|

|

Research Methods |

|

|

|

Strategy |

|

|

|

Analisis Data |

|

|

|

Data Interpretation |

Generalization | Summarization |

|

Problem |

|

|

|

Terminology |

|

|

|

Sampling Method |

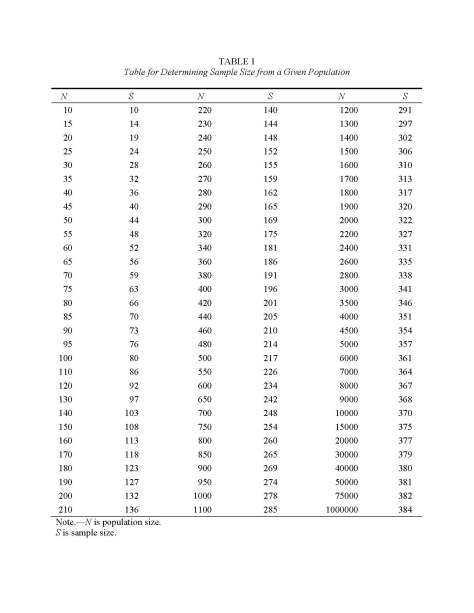

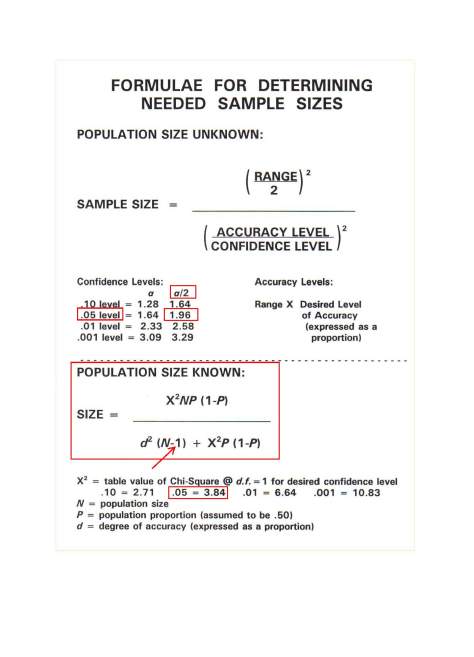

Probalility Sampling (Creswell, 2005), Kejcie and Morgan, 1970) | Non-Probalility Sampling (Miles and Huberman,1994 dan Creswell, 2005) |
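Since Krejcie and Morgan (1970) is cited for probability sampling, a short sketch of the sample-size formula usually attributed to that paper may help. It assumes the commonly quoted defaults (chi-square value 3.841 for one degree of freedom at 95% confidence, population proportion 0.5, margin of error 0.05); the function name and the choice to round up are assumptions of this sketch, not part of the source notes.

```python
import math


def krejcie_morgan_sample_size(population, chi_sq=3.841, p=0.5, d=0.05):
    """Sample size s = X^2 * N * P(1-P) / (d^2 * (N-1) + X^2 * P(1-P)).

    population: size N of the finite population
    chi_sq:     chi-square value for 1 degree of freedom (3.841 at 95% confidence)
    p:          assumed population proportion (0.5 gives the largest sample)
    d:          accepted margin of error, as a proportion
    """
    if population <= 0:
        raise ValueError("population must be positive")
    numerator = chi_sq * population * p * (1 - p)
    denominator = d ** 2 * (population - 1) + chi_sq * p * (1 - p)
    # Rounding up is an assumption here: a fraction of a respondent
    # cannot be sampled, so the next whole number is taken.
    return math.ceil(numerator / denominator)


if __name__ == "__main__":
    for n in (100, 1000, 10000):
        print(n, krejcie_morgan_sample_size(n))
```

The required sample grows much more slowly than the population, which is why published sample-size tables flatten out for large populations.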

“Need” refers to the gap or discrepancy between a present state (what is) and a desired state (what should be). The need is neither the present nor the future state; it is the gap between them.

“Target Group”: needs assessments are focused on particular target groups in a system. Common target groups in education settings include students, parents, teachers, administrators, and the community at large. Ideally, needs assessments are initially conducted to determine the needs of the people for whom the system exists. However, a “comprehensive” needs assessment often takes into account needs identified in other parts of a system. For example, a needs assessment might include the concerns of the “service providers” (e.g., teachers, guidance counselors, or school principals: the people who have a direct relationship with the service receivers) or “system issues” (e.g., availability of programs, services, and personnel; level of program coordination; and access to appropriate facilities).

A “Needs Assessment” is a systematic approach that progresses through a defined series of phases. Needs Assessment focuses on the ends (i.e., outcomes) to be attained, rather than the means (i.e., process). For example, reading achievement is an outcome whereas reading instruction is a means toward that end. It gathers data by means of established procedures and methods designed for specific purposes. The kinds and scope of methods are selected to fit the purposes and context of the needs assessment. Needs assessment sets priorities and determines criteria for solutions so that planners and managers can make sound decisions. Needs assessment sets criteria for determining how best to allocate available money, people, facilities, and other resources. Needs assessment leads to action that will improve programs, services, organizational structure and operations, or a combination of these elements.

Let’s take a quick look at general steps taken in a needs assessment.

Watkins describes analysis as “a process for breaking something down to understand its component parts and their relationships” (R. Watkins, personal communication, June 13, 2016). It has also been concluded that “a needs assessment identifies gaps between current and desired results and places those [gaps] in priority order on the basis of the costs to ignore the needs.” The same authors also stress that the gap is the need.
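The idea that the need is the gap between present and desired results, with gaps ranked by the cost of ignoring them, can be illustrated with a minimal Python sketch. Everything here is hypothetical: the `Need` class, the `prioritize` function, the indicator names and all numbers are invented for illustration, and how the cost of ignoring a need would actually be estimated is left open.

```python
from dataclasses import dataclass


@dataclass
class Need:
    outcome: str           # the end (result) being assessed, not the means
    present: float         # present state ("what is")
    desired: float         # desired state ("what should be")
    cost_to_ignore: float  # assumed estimate of the cost of leaving the gap open

    @property
    def gap(self) -> float:
        """The need is the gap itself, not either of the two states."""
        return self.desired - self.present


def prioritize(needs):
    """Keep only real gaps and order them by the cost of ignoring them."""
    real_gaps = [n for n in needs if n.gap > 0]
    return sorted(real_gaps, key=lambda n: n.cost_to_ignore, reverse=True)


# Hypothetical indicators, invented purely for illustration.
sample_needs = [
    Need("reading achievement (% at grade level)", 62.0, 80.0, 9.0),
    Need("attendance rate (%)", 95.0, 95.0, 2.0),
    Need("graduation rate (%)", 78.0, 90.0, 12.0),
]

if __name__ == "__main__":
    for need in prioritize(sample_needs):
        print(f"{need.outcome}: gap={need.gap:.1f}, cost to ignore={need.cost_to_ignore}")
```

Note that the indicators are outcomes (reading achievement), not means (reading instruction), in line with the focus on ends described above.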

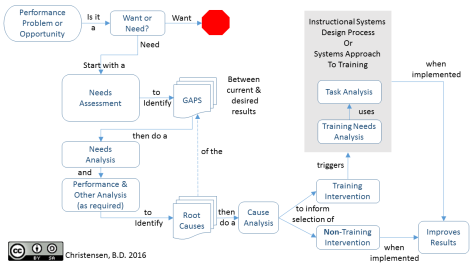

Figure 1: Needs Assessment vs. Needs Analysis Concept Map V2.0

Source adapted and taken from: